I’m preparing to record a book I’ve written. I’m using a Blue Snowball mic, Voicemeeter, and Audacity on Windows 10, with my walk-in closet as a makeshift studio to avoid background noise. My gut tells me I’m okay: when I play the sample file I’ve recorded through my desktop system, at the same volume I normally use at my computer, the voice quality sounds pleasant.

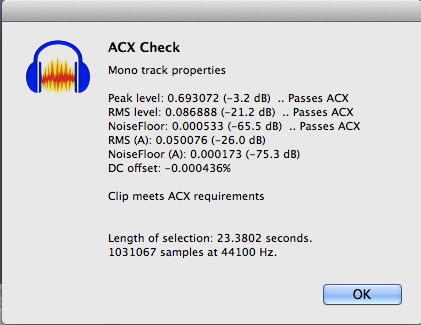

I’m confused by this instruction in the ACX guide: “Each file must measure between -23dB and -18dB RMS.” I want to be sure I’m recording close to that range so the audio won’t end up overprocessed after I’ve put in all the effort of recording 100,000 words. The problem is that I don’t know how to read what I’m seeing in Audacity as I record. I’ve attached a screenshot of part of the recording’s waveform in Audacity.

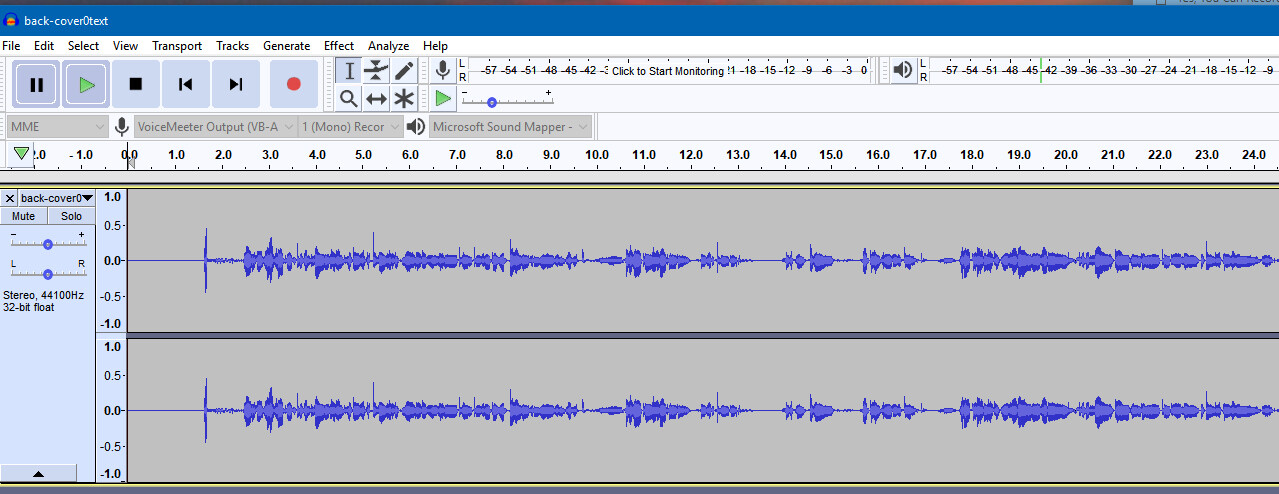

I’m seeing the bulk of the recording sitting between -0.25 and 0.25 on the waveform’s vertical scale. Spikes go no further than 0.5.

What does this mean relative to the ACX guide calling for between -23dB and -18dB RMS?
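For reference, the usual conversion between a linear waveform scale and decibels is 20·log10(amplitude). Here is a minimal sketch of it, assuming the vertical scale in the screenshot is Audacity’s linear view, where 1.0 is full scale (0 dB). The function names are made up for illustration and are not part of Audacity or ACX. Keep in mind that the visible peaks of a waveform are not the same thing as its RMS level, which is an average over the whole file and normally sits well below the peaks.

```python
import math

def amplitude_to_db(amplitude):
    """Convert a linear amplitude (1.0 = full scale) to dB relative to full scale."""
    return 20 * math.log10(abs(amplitude))

def db_to_amplitude(db):
    """Convert a dB value relative to full scale back to a linear amplitude."""
    return 10 ** (db / 20)

def rms_db(samples):
    """RMS level, in dB relative to full scale, of a list of linear samples."""
    mean_square = sum(s * s for s in samples) / len(samples)
    return 10 * math.log10(mean_square)

if __name__ == "__main__":
    # Peak readings taken from the waveform display (assumed linear scale).
    for level in (0.5, 0.25):
        print(f"peak {level} is about {amplitude_to_db(level):.1f} dB")

    # The ACX RMS window expressed on the same linear scale.
    for db in (-23, -18):
        print(f"{db} dB RMS is about {db_to_amplitude(db):.3f} linear")
```

Under those assumptions, spikes at 0.5 work out to roughly -6 dB and the 0.25 region to roughly -12 dB as peak values, while the -23dB to -18dB RMS window corresponds to an average level of roughly 0.07 to 0.13 on the linear scale. The actual RMS of a file still has to be measured over the whole recording rather than read off the waveform by eye.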

I’m new to all this jargon and trying to catch up as quickly as I can so I can get this job done. Hopefully I don’t need a degree in audio recording to understand it well enough to accomplish this task.

Thanks for any help.