I’m going to be recording some vinyl LPs using a 16-bit USB audio interface (it does have a knob controlling the level that enters its ADC). Apart from noise concerns, is it true that recording at a higher level will give a more accurate recording than at a lower level? The example below made me think so:

– I will use 16-bit in the example: 16-bit has 2^16 = 65536 baskets of amplitude, which cover -1 to 0 to +1 on the Audacity linear scale. For simplicity, consider only the positive part of the signal, which is covered by half that number, so 32768 baskets of amplitude.

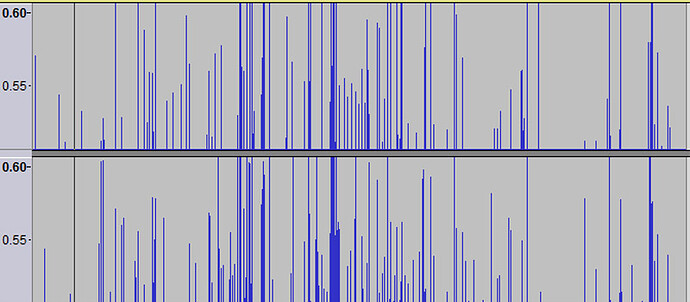

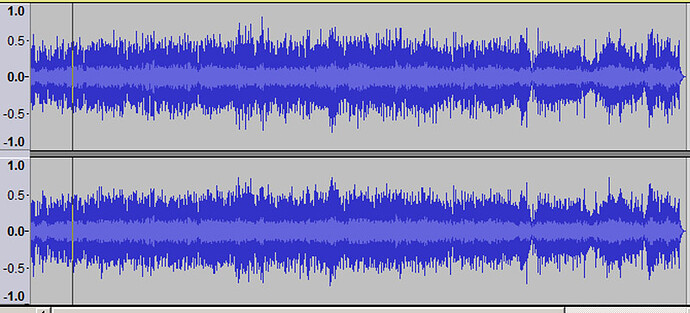

– The example: an analog signal with a positive peak at +0.3 on the linear scale (assume a negative peak of -0.3), being converted to digital with 16 bits, would be documented by 0.3 × 32768 ≈ 9830 baskets of amplitude. However, if that analog signal were first amplified to peak at +0.9, then it would be documented by 0.9 × 32768 ≈ 29491 baskets of amplitude. So it would seem that tripling the analog signal before it enters the ADC would document the same wave with three times the baskets of amplitude, or three times the fineness.
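The arithmetic in this example can be checked with a few lines of Python. This is only a sketch of the counting argument above, not a model of a real ADC; the function name `positive_steps` is made up for illustration.

```python
def positive_steps(peak, bits=16):
    """Count the amplitude steps between 0 and `peak` on a 0..1 linear scale.

    `positive_steps` is a hypothetical helper for this example only.
    """
    half_range = 2 ** (bits - 1)   # steps covering the positive half (32768 for 16-bit)
    return int(peak * half_range)  # truncate to whole steps

low = positive_steps(0.3)    # peak at +0.3
high = positive_steps(0.9)   # same signal amplified to peak at +0.9

print(low, high, round(high / low, 2))
```

The two counts match the figures quoted above, and their ratio comes out at about 3.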

I initially thought 9830 baskets still seemed like a large number, but then remembered reading that some of the very first CDs were digitized with 14 bits and often sounded bad. 14-bit has 2^14 = 16384 baskets of amplitude, with the positive part of the signal covered by half that number, so 8192, which is close to the lower-level 16-bit example of 9830.
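One way to put the 14-bit comparison on a common scale is to convert the number of baskets actually used into an equivalent bit depth with a base-2 logarithm. The sketch below assumes the same simplified counting as the example (peak times half the range, doubled to cover both polarities); the variable names are illustrative.

```python
import math

FULL_SCALE_BITS = 16
half_range = 2 ** (FULL_SCALE_BITS - 1)   # 32768 positive steps for 16-bit

# Positive-half steps available in a full-scale 14-bit recording
steps_14 = 2 ** (14 - 1)

# Equivalent bit depth when the signal only reaches `peak` of 16-bit full scale:
# log2 of the total steps used across both polarities.
bits_low = math.log2(2 * 0.3 * half_range)    # peak at +0.3
bits_high = math.log2(2 * 0.9 * half_range)   # peak at +0.9

print(steps_14, round(bits_low, 2), round(bits_high, 2))
```

Under these assumptions, the +0.3 recording uses a little more than 14 bits' worth of steps, while the +0.9 recording uses nearly all 16.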

So APART from noise concerns, is it true that recording at a higher level will:

- Give a more accurate recording than a lower level?

- With 16-bit, lower the risk of sounding bad, as the 14-bit CDs did?

(I know using 20, 24, or 32 bit would make these questions irrelevant, but I’m limited to 16. Also, I’m very interested in the answers for technical understanding.)

Thank you for sharing your expertise.