which one do we hear?

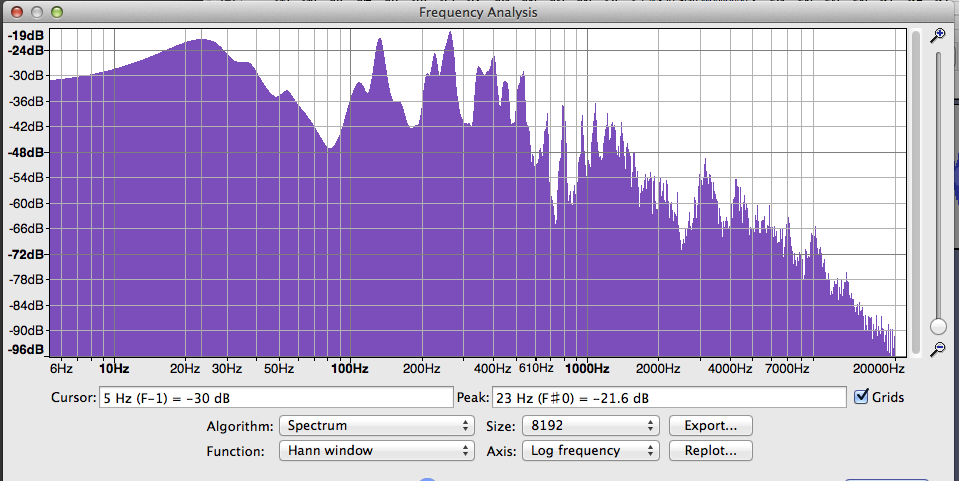

There is no exact match-up. A voice is an insane collection of different tones. This is an analysis of a spoken word or two. Each of those blue peaks represents a different tone, roughly labeled by the numbers along the bottom, and there are many thousands of them.

But the more interesting answer is we would hear the voice, but possibly not the words. The brain is good at turning trash into meaning. Three people listening to the same distorted voice will hear different things. Also see: zodiac signs. Obviously, those three stars look like an archer, right?

Does it get louder?

That depends on the sounds. There is a movement to stop using plain waveform size (RMS) for measuring loudness and start using LUFS, which takes into account that your ear works much better at some tones than others. See: a baby screaming on a jet and fingernails on a blackboard.
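To see why RMS alone misses this, here is a rough sketch in plain Python. The `rms` and `sine` helpers and the two frequencies are made up for illustration. Two tones of the same size get the same RMS number, even though one sits in the range where hearing is generally most sensitive and the other is a low hum.

```python
import math

def rms(samples):
    """Root-mean-square level of a list of float samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def sine(freq_hz, seconds=1.0, rate=44100, amplitude=0.5):
    """Hypothetical helper: a plain sine tone as a list of floats."""
    n = int(seconds * rate)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / rate) for i in range(n)]

low = sine(100)     # low hum
high = sine(3000)   # much more annoying to a human ear

# RMS only looks at waveform size, so both tones measure the same.
print(round(rms(low), 4), round(rms(high), 4))
```

A LUFS meter filters the sound to favor the tones the ear favors before it measures, so it would not rate these two the same. That filtering is not shown here.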

does our intellect fill in

Yes. Your ear works in the subjunctive. It’s the words that would have been there had they been clearer. That also gives you the hilarity of getting people to tell you what they think song lyrics are just by listening.

What about if our voice and the noise are different in phase by 180 degrees, would everything go silent?

Yes. Or as silent as the sound channel allows. Make an exact copy of some sound track with Control+D (duplicate). Select one of the two and invert it with Effect > Invert. Listen to both of them together and the system reverts to electronic background noise.
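The same thing in numbers, as a minimal sketch. The test signal is an arbitrary pair of tones standing in for a real track. Inverting flips the sign of every sample, and mixing adds the two tracks sample by sample.

```python
import math

rate = 44100

# One second of a made-up "track": two tones mixed together.
track = [0.4 * math.sin(2 * math.pi * 440 * i / rate) +
         0.2 * math.sin(2 * math.pi * 1234 * i / rate)
         for i in range(rate)]

# Invert flips every sample upside down.
inverted = [-s for s in track]

# Playing both tracks together adds them sample by sample.
mix = [a + b for a, b in zip(track, inverted)]

peak = max(abs(s) for s in mix)
print(peak)  # 0.0
```

Every sample meets its exact opposite, so the mix is dead flat. This only works because the copy is exact.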

There is an adapter cable I call the Devil’s Adapter.

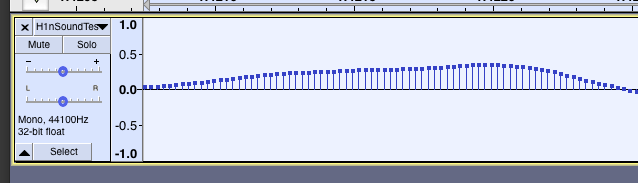

It suggests you can easily connect an XLR microphone to a computer soundcard. What it actually does is create a stereo show (two blue waves) with your voice exactly out of phase left to right. It only sounds a little weird if you’re listening in stereo (voices coming from behind you) but when played on a mono system which tries to mix them, the voice vanishes. So of the four clients that got your show, one of them claims to have received a dead silent track. …!!! That last one is listening on a mono speaker system, a stereo system that accidentally mixed to mono, or their phone.

If you work on the forum enough, you get to recognize the magic. “What kind of microphone are you using and how do you have it connected?”

Sound cancellation also fails in the wrong places. You can’t make one person sing the same song twice, invert one, and have it cancel. You also can’t cancel out an alert tone by casually recording it twice. Nice try, though.
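Here is a sketch of why a second take won't cancel, assuming the only difference between the takes is a tiny timing slip. Real takes differ in far more than that. The `tone` helper and the 5-sample delay are invented for the example.

```python
import math

RATE = 44100

def tone(freq_hz, n, delay_samples=0):
    """Hypothetical helper: a sine tone, optionally starting a few samples late."""
    return [math.sin(2 * math.pi * freq_hz * (i - delay_samples) / RATE)
            for i in range(n)]

first = tone(1000, RATE)
second = tone(1000, RATE, delay_samples=5)  # about a tenth of a millisecond late

# Invert the second take and mix it with the first.
residual = [a - b for a, b in zip(first, second)]

peak_residual = max(abs(s) for s in residual)
print(peak_residual)
```

Even with that tiny slip, well over half of the original level is left over. A human singer or a casual re-recording will be off by much more than five samples.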

individual samples in each case?

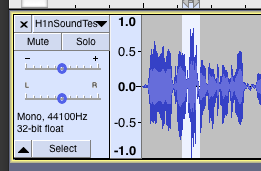

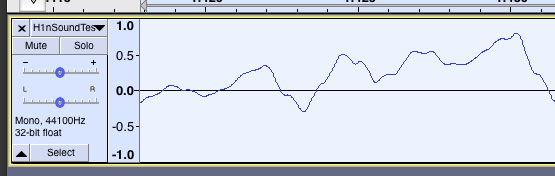

Digital throws some interesting curve balls into the system. The digital system assigns numbers to the analog wave. You can see the assignments if you magnify an Audacity show enough.

Audacity works internally at a super high quality bit depth (32-bit floating point by default) to keep distortion down when you’re doing effects. The problem comes when you have to make a normal sound track in the world you and I live in. There can be conversion errors, so Audacity adds dither: it very slightly scrambles things to keep the errors from lining up and becoming audible.
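A rough sketch of that scrambling (dither, in audio terms) when going down to 16-bit. This is a generic triangular dither written for illustration, not Audacity's own code, and the `to_16bit` helper and its parameters are assumptions.

```python
import math
import random

def to_16bit(samples, dither=True, seed=0):
    """Hypothetical converter: float samples (-1..1) to 16-bit integers."""
    rng = random.Random(seed)
    out = []
    for s in samples:
        scaled = s * 32767
        if dither:
            # Triangular dither: difference of two uniform random values,
            # so up to about one step either way.
            scaled += rng.random() - rng.random()
        out.append(max(-32768, min(32767, round(scaled))))
    return out

rate = 44100
original = [0.25 * math.sin(2 * math.pi * 440 * i / rate) for i in range(rate)]

exported = to_16bit(original)
back = [v / 32767 for v in exported]  # what you get when you open the file again

# Worst-case difference between what you made and what you got back.
error = max(abs(a - b) for a, b in zip(original, back))
print(error)
```

The difference is tiny, on the order of one 16-bit step, but it is not zero. So the file on disk is not sample-for-sample the show you built.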

This gives another common forum post. A scientist wants to know why their sound experiment didn’t work out exactly as theory would have it. Because they don’t have the sound file they think they do. Audacity will always go in the direction of a good sounding show, not one that’s scientifically perfect.

Audacity can’t split a mixed show apart into individual voices, instruments, and sounds. Correct me if I’m wrong, but that’s your problem.

Koz