So, when you open an audio file in Audacity (or any other software that can show a spectrogram),

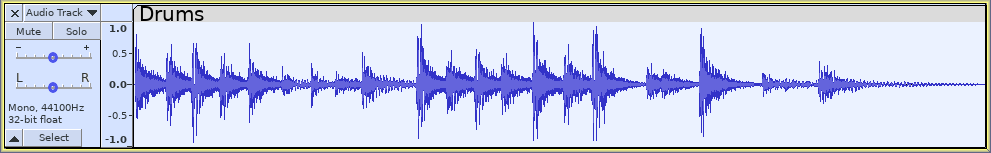

it instantly converts the sound into a 2D graph: time along one axis, frequency along the other.

Now let’s say the background audio is mostly silent.

The only sound heard from time to time is DRUM BEATS, so every time a drum beat is heard, it is CLEARLY visible in the spectrogram.

It lights up lots of “frequency ranges” at once and then drops off back into silence.
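For reference, here is roughly what the software computes. A spectrogram is usually a short-time Fourier transform (STFT): the audio $x[n]$ is cut into overlapping frames of $N$ samples spaced $H$ samples apart, each frame is multiplied by a window $w[n]$ (often a Hann window), and each frame is Fourier-transformed. The image you see is $|X[m,k]|$ for frame $m$ and frequency bin $k$ (usually on a dB scale). The “lights up lots of frequency ranges at once” pattern can be reduced to one number per frame with *spectral flux*, which adds up how much every bin got louder since the previous frame. This is one common formulation, not the only one:

$$
X[m,k] = \sum_{n=0}^{N-1} w[n]\, x[mH + n]\, e^{-2\pi i k n / N}, \qquad k = 0, \dots, N/2
$$

$$
\mathrm{SF}[m] = \sum_{k=0}^{N/2} \max\bigl(0,\; |X[m,k]| - |X[m-1,k]|\bigr)
$$

A drum hit in silence makes many bins jump at once, so $\mathrm{SF}[m]$ spikes on the frames where the hit arrives and stays much smaller while the sound fades, because fading bins mostly get quieter and decreases are clipped to zero.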

My question is:

Would there be a way to code something that could tell WHEN each of those sounds starts?

And then be able to COUNT them?

So for example: silence, then a DRUM BEAT. The millisecond the spectrogram shows a drumbeat-like pattern, BOOM, the program recognizes it and says: “a drumbeat was heard at this exact millisecond.”

Count that as one.
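This task is commonly called *onset detection*. Below is a minimal, dependency-free Python sketch of one common approach, assuming mono audio already loaded as a list of floats: compute a magnitude spectrogram, compute spectral flux (the summed increase across all frequency bins from one frame to the next), and count local peaks of the flux that clear a fraction of its maximum. The function names, the `threshold_ratio` and `min_gap_ms` values, the 8 kHz sample rate, and the synthetic test signal (decaying noise bursts standing in for drum hits) are all illustrative assumptions, not values tuned for real recordings.

```python
import cmath
import math
import random


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even, odd = fft(x[0::2]), fft(x[1::2])
    twiddled = [cmath.exp(-2j * math.pi * k / n) * odd[k] for k in range(n // 2)]
    return ([even[k] + twiddled[k] for k in range(n // 2)]
            + [even[k] - twiddled[k] for k in range(n // 2)])


def spectrogram(signal, frame_len=256, hop=64):
    """Magnitude spectrogram: one list of |X[k]| (k = 0..frame_len/2) per frame."""
    # Hann window tapers each frame's edges
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / frame_len) for i in range(frame_len)]
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        chunk = [s * w for s, w in zip(signal[start:start + frame_len], window)]
        frames.append([abs(c) for c in fft(chunk)[:frame_len // 2 + 1]])
    return frames


def detect_onsets(signal, fs, frame_len=256, hop=64, threshold_ratio=0.3, min_gap_ms=100.0):
    """Onset times in ms, taken from peaks of the spectral flux."""
    frames = spectrogram(signal, frame_len, hop)
    # Spectral flux: summed *increase* in magnitude over all bins since the previous frame
    flux = [0.0] + [sum(max(0.0, b - a) for a, b in zip(prev, cur))
                    for prev, cur in zip(frames, frames[1:])]
    threshold = threshold_ratio * max(flux)
    onsets = []
    for m in range(1, len(flux) - 1):
        # Keep local maxima of the flux that clear the threshold
        if flux[m] > threshold and flux[m] >= flux[m - 1] and flux[m] > flux[m + 1]:
            t_ms = 1000.0 * (m * hop + frame_len / 2) / fs  # center of frame m
            # Skip extra peaks caused by the same hit while it is still ringing
            if not onsets or t_ms - onsets[-1] >= min_gap_ms:
                onsets.append(t_ms)
    return onsets


def synthetic_drum_track(fs, onsets_ms, total_ms, decay_ms=30.0, hit_ms=150.0):
    """Hypothetical test signal: silence plus exponentially decaying noise bursts."""
    out = [0.0] * int(fs * total_ms / 1000)
    tau = fs * decay_ms / 1000
    for n, t in enumerate(onsets_ms):
        rng = random.Random(n)  # fixed seed per hit so runs are reproducible
        start = int(fs * t / 1000)
        for i in range(int(fs * hit_ms / 1000)):
            if start + i < len(out):
                out[start + i] += rng.uniform(-1.0, 1.0) * math.exp(-i / tau)
    return out


fs = 8000
track = synthetic_drum_track(fs, [200, 600, 1000], total_ms=1400)
onsets = detect_onsets(track, fs)
print(f"{len(onsets)} drum hits at", [round(t) for t in onsets], "ms")
```

Each reported time is the center of the spectrogram frame where the flux peaked, so it is only accurate to within about one frame (256 samples, 32 ms at 8 kHz in this sketch). `min_gap_ms` keeps the ringing right after a hit from being counted twice, but it also means this sketch cannot count two hits closer together than that gap. Real audio is usually 44.1 kHz or more, and a real program would more likely use NumPy or an existing detector such as librosa’s `librosa.onset.onset_detect` than a hand-written FFT.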

So the goal of the first question is:

Would it be possible to create a program that could COUNT the number of drumbeats heard in an audio file?

(I realize this may be possible without a spectrogram, but I think it’s a much better way to visualize it, and it might be easier.)

///

Second question:

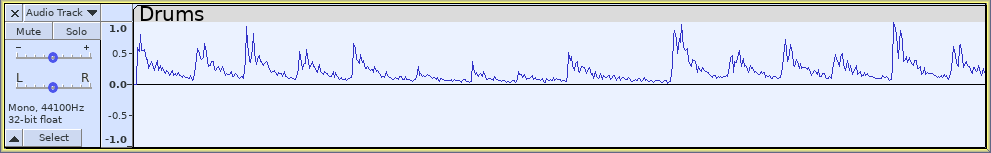

When a drumbeat is heard, the sound “lingers on” for a moment before fading into silence.

If a SECOND drumbeat happened during that “drop-off” period, would it be possible to teach the program to recognize it?

What if the two sounds were super close together? 300 ms apart? 200 ms apart? 100 ms apart? 50 ms apart?
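A second hit during the fade-out is usually still detectable, because it produces a fresh *jump* in loudness even though the earlier hit has not died away yet. The sketch below tests that on the spacings listed above, using a simpler time-domain variant instead of a spectrogram (in line with the note in the first question that a spectrogram isn’t strictly needed): it splits the audio into short 5 ms frames, measures each frame’s average energy, and flags a frame whose energy is at least `rise_ratio` times the previous frame’s. The function names, the 30 ms decay of the synthetic hits, and every parameter value are hypothetical assumptions; real drums with long ringing, reverb, or a much quieter second hit will be harder than this.

```python
import math
import random


def synthetic_drum_track(fs, onsets_ms, total_ms, decay_ms=30.0, hit_ms=150.0):
    """Hypothetical test signal: silence plus exponentially decaying noise bursts."""
    out = [0.0] * int(fs * total_ms / 1000)
    tau = fs * decay_ms / 1000
    for n, t in enumerate(onsets_ms):
        rng = random.Random(n)  # fixed seed per hit so runs are reproducible
        start = int(fs * t / 1000)
        for i in range(int(fs * hit_ms / 1000)):
            if start + i < len(out):
                out[start + i] += rng.uniform(-1.0, 1.0) * math.exp(-i / tau)
    return out


def energy_onsets(signal, fs, frame_ms=5.0, rise_ratio=4.0, floor=1e-4, min_gap_ms=20.0):
    """Onset times in ms, from sudden jumps in short-frame energy."""
    frame = int(fs * frame_ms / 1000)
    onsets, prev = [], 0.0
    for idx, start in enumerate(range(0, len(signal) - frame + 1, frame)):
        energy = sum(s * s for s in signal[start:start + frame]) / frame
        t_ms = idx * frame_ms  # start time of this frame
        # A new hit is a big jump over the previous frame, even if an older hit is still fading
        if energy > floor and energy > rise_ratio * max(prev, floor):
            # Ignore a second trigger on the same hit (e.g. an onset split across two frames)
            if not onsets or t_ms - onsets[-1] >= min_gap_ms:
                onsets.append(t_ms)
        prev = energy
    return onsets


fs = 8000
for spacing in (300, 200, 100, 50):
    true_ms = [100 + k * spacing for k in range(4)]
    track = synthetic_drum_track(fs, true_ms, total_ms=1300)
    print(f"{spacing} ms apart -> detected at {energy_onsets(track, fs)}")
```

With these clean synthetic hits, every spacing from 300 ms down to 50 ms comes back as four onsets at the true times. Passing `min_gap_ms=80.0` on the 50 ms track merges it down to two onsets, which illustrates the trade-off a real detector has to make between ignoring a hit’s own ringing and catching fast repeated hits.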

What limitations would one face when trying to code something like this, if it’s even possible?
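One basic limitation is the time/frequency trade-off built into any spectrogram. With sample rate $f_s$, a frame (window) of $N$ samples, and a hop of $H$ samples between consecutive frames:

$$
\Delta t_{\text{window}} = \frac{N}{f_s}, \qquad \Delta f = \frac{f_s}{N}, \qquad \Delta t_{\text{hop}} = \frac{H}{f_s}, \qquad \Delta t_{\text{window}}\,\Delta f = 1
$$

For example, at $f_s = 44100$ Hz a 2048-sample frame spans about 46.4 ms with bins about 21.5 Hz apart, while a 512-sample frame spans about 11.6 ms with bins about 86.1 Hz apart. Two hits closer than roughly one window length start to share the same frames and become harder to separate, so resolving beats 50 ms apart calls for short windows and a small hop, at the cost of coarser frequency detail. Beyond that, the practical limits are mostly acoustic: how loud the second hit is compared with the first one’s tail, reverb, and any other sounds in the recording.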

Apologies if I didn’t explain it in the best way possible, but I’m really curious whether something like this could be done.

Hopefully someone can enlighten me, so I can hire a programmer to get it done!