I’m pleased about that. I hate it when we have to break it to people that their damaged recording is irreparable, especially when it’s a really important recording for them.

Sound engineers go to great lengths to avoid two particular problems in their original recorded material: clipping and excessive echo/reverberation. They take such pains because both problems are notoriously difficult to repair satisfactorily.

If there’s just a small amount of clipping on the tops of a few peaks, it can be repaired fairly well, and ClipFix does a reasonably good job of that. If the audio is severely distorted, it’s time to start again. I’ve had the opportunity to use some very expensive sound restoration equipment: on very slightly clipped audio it performed brilliantly, but on badly distorted audio the results were worse than ClipFix (and sounded worse than the original “un-fixed” audio).

I’ll try to give an idea of the problem. In these two tests, the numbers represent sample values that have been “clipped” at 9, so no value can go above 9.

Test 1: correct the sequence.

1, 2, 0, 4, 7, 9, 9, 9, 8, 7, 6, 7, 8,

Test 2: correct the sequence.

0, 5, 9, 9, 9, 9, 4, 5, 4,

In test 1, there is a pretty good chance that the middle “9” should actually be a “10”.

In test 2, we really have no idea what any of the 9s should be. Do we guess at 9, 12, 11, 9? Can we be sure that any of the 9s are correct?
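To see why test 2 can’t be solved, here is a minimal Python sketch, assuming simple digital hard clipping (every sample clamped to the range -9 to 9). The function name `hard_clip` and the two “guessed” originals are made up for illustration only. The point is that quite different original peaks produce exactly the same clipped sequence, so nothing left in the clipped data can tell them apart.

```python
def hard_clip(samples, limit):
    """Clamp every sample to the range [-limit, limit] (simple digital hard clipping)."""
    return [max(-limit, min(limit, s)) for s in samples]


# Two hypothetical "true" originals for test 2 (invented values, for illustration only).
guess_a = [0, 5, 9, 12, 11, 9, 4, 5, 4]
guess_b = [0, 5, 14, 20, 17, 10, 4, 5, 4]

clipped_a = hard_clip(guess_a, 9)
clipped_b = hard_clip(guess_b, 9)

print(clipped_a)               # [0, 5, 9, 9, 9, 9, 4, 5, 4]
print(clipped_a == clipped_b)  # True: different originals, identical clipped result
```

Clipping is a many-to-one mapping: once the peaks are flattened, any original that stayed above 9 over those samples is equally consistent with what was recorded.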

ClipFix works by looking at the “slope” on either side of the clipped region. In test 1, it can calculate that the middle “9” is probably lower than it should be, and will replace it with a higher value. In test 2, there are big increases between the first three numbers, so ClipFix will assume that the next value should also be substantially higher, but in reality there is no way of knowing what it should have been.

To complicate matters further, if the clipping occurred in the analogue domain, the peaks were probably heavily compressed before the clip limit was reached. So even if the clipped region were perfectly restored, there would still be substantial distortion, because the values below the clip threshold are also wrong.

Complicating matters even further, clipping is most likely to occur on sudden high-level “transients”, for example a drum hit. In that case, the harmonic content of the clipped region will bear virtually no relation to the harmonic content of the sound immediately before the drum hit.
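The slope idea can be sketched in a few lines of Python. This is a simplified illustration of slope extrapolation, not ClipFix’s actual code: the names `find_clipped_runs` and `repair_clipped` are hypothetical, it only handles the positive clip limit, and it simply extends a straight line through the two unclipped samples on each side of a clipped run. A side whose line doesn’t rise toward the run is ignored, and where both sides apply the lower of the two lines is used.

```python
def find_clipped_runs(samples, limit):
    """Return inclusive (start, end) index pairs for runs of samples at or above limit."""
    runs = []
    start = None
    for i, s in enumerate(samples):
        if s >= limit:
            if start is None:
                start = i  # a new clipped run begins here
        elif start is not None:
            runs.append((start, i - 1))
            start = None
    if start is not None:
        runs.append((start, len(samples) - 1))  # run reaches the end of the data
    return runs


def repair_clipped(samples, limit):
    """Estimate clipped samples by extending the slope on each side of each clipped run.

    Simplified sketch, NOT ClipFix's actual algorithm.
    """
    out = list(samples)
    n = len(samples)
    for start, end in find_clipped_runs(samples, limit):
        for k in range(start, end + 1):
            estimates = []
            # Left side: continue the line through samples[start-2] and samples[start-1].
            if start >= 2:
                rise = samples[start - 1] - samples[start - 2]
                if rise > 0:
                    estimates.append(samples[start - 1] + rise * (k - start + 1))
            # Right side: continue the line through samples[end+2] and samples[end+1],
            # extended backwards into the clipped run.
            if end + 2 < n:
                rise = samples[end + 1] - samples[end + 2]
                if rise > 0:
                    estimates.append(samples[end + 1] + rise * (end + 1 - k))
            if estimates:
                # Take the lower line, but never go below the level the sample was stuck at.
                out[k] = max(limit, min(estimates))
    return out


test1 = [1, 2, 0, 4, 7, 9, 9, 9, 8, 7, 6, 7, 8]
test2 = [0, 5, 9, 9, 9, 9, 4, 5, 4]
print(repair_clipped(test1, 9))  # [1, 2, 0, 4, 7, 10, 10, 9, 8, 7, 6, 7, 8]
print(repair_clipped(test2, 9))  # [0, 5, 10, 15, 20, 25, 4, 5, 4]
```

On test 1 the sketch lifts the middle sample to 10, which is plausible. On test 2 only the left-hand slope rises toward the clip, so the estimates climb to 25 before the signal drops straight back to 4: a confident-looking answer that is really just a guess.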

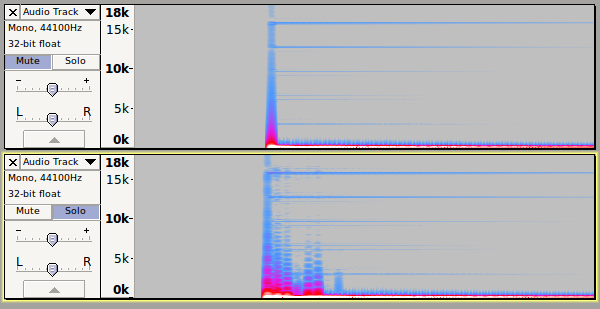

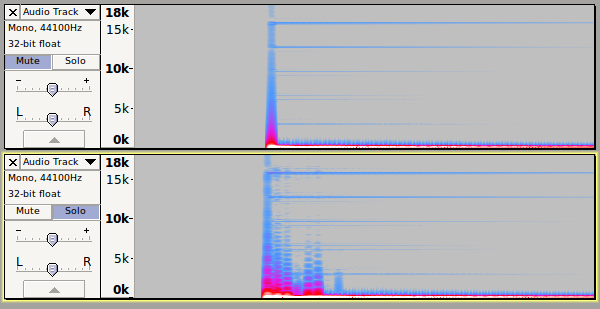

Here are a couple of spectrograms of a low-pitched drum being struck. The upper track is a clean recording and the lower track is slightly clipped. On the lower track we can see a series of broadband pulses after the initial strike. How is an automatic repair algorithm to know whether those frequencies should be present or not?
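A steady tone makes the underlying effect easier to see than a drum hit. The Python sketch below is an illustration with hypothetical names and parameters (`dft_magnitude`, `N`, `CYCLES`), not an analysis of the recordings above. It hard clips a sine wave at half its amplitude and measures a few DFT bins: the clean tone has energy only at its fundamental, while the clipped version gains energy at odd harmonics (3rd, 5th, ...). A repair algorithm looking only at the clipped version has no way to tell such added frequencies from ones that were genuinely part of the sound.

```python
import math


def dft_magnitude(x, k):
    """Magnitude of DFT bin k of the real sequence x (direct sum for a single bin)."""
    n = len(x)
    re = sum(v * math.cos(2 * math.pi * k * i / n) for i, v in enumerate(x))
    im = -sum(v * math.sin(2 * math.pi * k * i / n) for i, v in enumerate(x))
    return math.hypot(re, im)


N = 64       # samples in the analysis frame (hypothetical)
CYCLES = 2   # the tone completes exactly 2 cycles, so its fundamental lands in bin 2
clean = [math.sin(2 * math.pi * CYCLES * i / N) for i in range(N)]
clipped = [max(-0.5, min(0.5, s)) for s in clean]  # hard clip at half amplitude

# Compare the fundamental and the 3rd and 5th harmonics of the clean and clipped tones.
for harmonic in (1, 3, 5):
    k = harmonic * CYCLES
    print(harmonic, round(dft_magnitude(clean, k), 3), round(dft_magnitude(clipped, k), 3))
```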