I made a recording but had the Mic Gain up too high. When the volume produced by the singers exceeded a certain level, distortion was added by the recorder.

Is there any way this can be removed?

There is a Declipper tool (“effect”) that you can try. (I’m not sure where I got it… It might be one of the LADSPA Plug-Ins.)

There are other de-clipping and clip repair tools too, but none of them are perfect. Clipping (distortion) happens when you hit a limit and the top (and bottom) wave peaks become flat-topped. In this case you clipped your analog-to-digital converter during recording. The problem is, information is permanently lost and there is simply no way to know the shape or height of the original waveform.
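To make the "information is permanently lost" point concrete, here is a minimal sketch in plain Python. The sample rate, the limit of 1.0 and the three amplitudes are made-up values for illustration, not anything taken from the recorder in question. It clips two takes of different loudness and shows that the flattened samples come out identical, so nothing in the stored data says how tall the original peaks were.

```python
import math

RATE = 8000   # samples per second (arbitrary for this sketch)
LIMIT = 1.0   # the largest value the converter can store (assumed)

def tone(amplitude, count=80, freq=440):
    """A sine tone at the given peak amplitude."""
    return [amplitude * math.sin(2 * math.pi * freq * i / RATE) for i in range(count)]

def clip(samples, limit=LIMIT):
    """Hard-clip: anything beyond +/-limit is stored as exactly +/-limit."""
    return [max(-limit, min(limit, s)) for s in samples]

quiet = tone(0.8)    # stays under the limit
loud = tone(1.6)     # peaks go over the limit
louder = tone(3.0)   # peaks go far over the limit

# Indices where the loud take was flattened by the clip.
flat = [i for i, s in enumerate(loud) if abs(s) > LIMIT]

print("quiet take unchanged:", clip(quiet) == quiet)
print("samples flattened in loud take:", len(flat))
print("flattened samples identical in both loud takes:",
      all(clip(loud)[i] == clip(louder)[i] for i in flat))
```

The only thing that still differs between the two clipped takes is how many samples are flat, which is part of why repair tools can only estimate the missing peaks.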

Really kind. Thanks for the info; and the explanation which will help me next time.

On the hunt now for a declipper. There isn’t one in my Audacity 2.0.2 set-up that I can see.

Audacity has an effect called “Clip Fix” which is below the dividing line in the Effects menu. It’s as good as most other clip repair tools but the general rule is that if the clipping is severe enough to hear, then it’s probably too severe to repair effectively.

Audacity can “see” the clipping but if I use clipfix it either removes so much that the soundtrack is affected or not enough so that the clipping remains.

I suppose removing a “clip” removes whatever was recorded at the same instant?

It can’t reinstate because it doesn’t/can’t know what to put back?

That’s exactly it.

ClipFix detects where the waveform has been cut off and attempts to reconstruct the missing part by extrapolating the waveform from just before to just after the “missing” part. It is “guessing” what the waveform might have been like before it was clipped. If the clipping is only very minor, then it can guess quite well and do a good job. The more that is missing the harder it is for ClipFix to guess and the less effective the repair is.
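The "guessing" can be sketched roughly as follows. This is not Audacity's actual ClipFix code; it is a simplified stand-in that fits a cubic curve through the two good samples on either side of each flat spot, and the function names (`clip_to`, `find_clipped_runs`, `repair`) are invented for the example. It is run on a test sine wave with mild clipping and again with severe clipping.

```python
import math

def clip_to(samples, limit):
    """Hard-clip every sample to the range [-limit, +limit]."""
    return [max(-limit, min(limit, s)) for s in samples]

def find_clipped_runs(samples, limit):
    """Return (start, end) index pairs of consecutive samples at the limit."""
    runs, start = [], None
    for i, s in enumerate(samples):
        if abs(s) >= limit:
            if start is None:
                start = i
        elif start is not None:
            runs.append((start, i - 1))
            start = None
    if start is not None:
        runs.append((start, len(samples) - 1))
    return runs

def lagrange(xs, ys, x):
    """Evaluate the polynomial passing through the points (xs, ys) at x."""
    total = 0.0
    for j, (xj, yj) in enumerate(zip(xs, ys)):
        term = yj
        for k, xk in enumerate(xs):
            if k != j:
                term *= (x - xk) / (xj - xk)
        total += term
    return total

def repair(samples, limit):
    """Replace each clipped run with a cubic fitted to its 4 good neighbours."""
    out = list(samples)
    for start, end in find_clipped_runs(samples, limit):
        if start < 2 or end > len(samples) - 3:
            continue  # not enough good samples on one side, leave it alone
        xs = [start - 2, start - 1, end + 1, end + 2]
        ys = [samples[x] for x in xs]
        for i in range(start, end + 1):
            out[i] = lagrange(xs, ys, i)
    return out

# Test signal: two cycles of a sine wave, 50 samples per cycle.
original = [math.sin(2 * math.pi * i / 50) for i in range(100)]

def worst_error_after_repair(limit):
    """Clip the test signal at limit, repair it, report the largest error."""
    repaired = repair(clip_to(original, limit), limit)
    return max(abs(a - b) for a, b in zip(original, repaired))

mild = worst_error_after_repair(0.9)    # only the tips of the peaks lost
severe = worst_error_after_repair(0.3)  # most of each peak lost
print("worst error, mild clipping:  ", round(mild, 4))
print("worst error, severe clipping:", round(severe, 4))
```

On this test signal the repair of the mild clipping lands much closer to the original than the repair of the severe clipping, which matches the behaviour described above. Real music is far less predictable than a sine wave, so real results will be worse.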

You might try zooming in to the most conspicuous spikes of distortion and either lowering their volume with the “Amplify” tool; or, if they appear briefly, cut them out and extend the truncated note by cutting and pasting the signal immediately before or after the cut part. If the edit causes a “pop” artifact, try to perform the edit on, say, a drum beat to mask the artifact. The latter option is labor intensive and time-consuming. The best solution would be to re-record the track if possible. Some editing artifact can be ameliorated by attenuating the frequency with the equalizer.

See number two.

– The Four Horsemen of Audio Recording (reliable, time-tested ways to kill your show)

– 1. Echoes and room reverberation (Don’t record the show in your mom’s kitchen.)

– 2. Overload and Clipping (Sound that’s too loud is permanently trashed.)

– 3. Compression Damage (Never do production in MP3.)

– 4. Background Sound (Don’t leave the TV on in the next room.)

The Clip Fix tools actually do a credible job assuming the damage is not too severe, but gentle distortion is never a reason to post on a forum. Posting happens when your show is already trashed.

Yes there are things that you can do if it’s a once in a lifetime recording and you have infinite patience, including “drawing in” the waveform with the Draw tool, then smoothing it out with the Repair tool, but unless you have the original Watergate tapes it’s probably not worth the effort…

“Ameliorated”, what a lovely word.

Basic problem is you can’t record/film and perform at the same time. I need a better monkey!

All in all, the video recorder only cost £159 so I can’t ask for too much. But learning enormously as I go here. Thanks to everyone for their help and contributions.

PS “Some editing artifact can be ameliorated by attenuating the frequency with the equalizer” is a rather beautiful sentence; especially for a “techie” oriented person. I particularly like the use of “artifact” rather than the more tempting “artifacts”. Delightful use of English.

OK. Now that’s settled can I address one thing I have never understood about sound?

Pure sound - like the concert hall “take your seats” A - is pretty uninteresting and the ear is drawn to harmonies (and unresolved sequences like the leading 7th).

Harmony means more than one frequency being played or heard at the same time but I’ve never understood how this can be.

The ear and a loudspeaker cone are single planar (2 dimensional effectively) surfaces and can only vibrate at a single frequency at a time otherwise they would tear or break up. A magnet driving a loudspeaker can only resonate at a single frequency at a time so how do we get harmonies?

Is it an illusion where each frequency we detect in fact follows the preceding one at millisecond intervals and the brain adds them together? A bit like sampling say, where so many samples are taken and then “played back” all at once fooling the brain into thinking they’ve been played together?

(If sound does indeed work like this then clipping should be removable by finding the right samples to get rid of?)

If the multiple frequencies are really heard simultaneously how does that work? Wouldn’t they just add up to a different frequency? And physically, how can a microphone diaphragm, an eardrum or a loudspeaker vibrate at multiple frequencies at the same time without, as I say, tearing or breaking up?

When I look at the sound trace in Audacity I see a horizontal sequence of spikes of varying heights which I think represent amplitude or volume or sound pressure? I know that within each spike there appears to be a number of frequencies which my ear hears simultaneously, giving the harmonic effect humans find so pleasing. But how does it happen?

This has troubled me since school days and physics classes. It would be nice to get an answer.

The eardrum is essentially 2 dimensional planar, but the inner ear is a complex 3 dimensional form (see the Wikipedia article “Eardrum”).

A loudspeaker cone does not, or should not, vibrate at its resonant frequency. For a well designed loudspeaker the resonant frequency of the speaker cone will be below the operating range of the speaker. Using various mechanisms, the speaker unit is “damped” to prevent the cone from resonating at its primary resonant frequency - this is important to prevent the speaker from “booming” at the resonant frequency.

Rather than “resonating”, the speaker is designed to “follow” as accurately as possible the signal that is driving it, which is usually not a single frequency, but a complex waveform made up of many frequencies superimposed on each other. Similarly the eardrum “follows” the complex waveform that is transmitted through the air.
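A small sketch may help with "superimposed". It assumes two made-up tones, 440 Hz and 660 Hz, and a sample rate of 8800 chosen only so the numbers come out neatly. At every instant the two pressures simply add, giving one number, and that one number is all the cone or eardrum has to follow.

```python
import math

RATE = 8800  # samples per second, picked so the pattern repeats every 40 samples

def tone(freq, amplitude, count):
    """A sine tone as a list of samples."""
    return [amplitude * math.sin(2 * math.pi * freq * i / RATE) for i in range(count)]

a = tone(440, 0.5, 200)   # the note A
e = tone(660, 0.3, 200)   # the E a fifth above it

# The pressure at the diaphragm is the sum of the two pressures, sample by sample.
combined = [x + y for x, y in zip(a, e)]

# One position per instant: that is the whole job of the cone or eardrum.
for i in range(5):
    print(i, round(a[i], 3), "+", round(e[i], 3), "=", round(combined[i], 3))

# The sum is not a new pure tone. It is a more complicated wiggle that
# repeats every 40 samples (220 times a second at this sample rate).
repeats = all(abs(combined[i] - combined[i + 40]) < 1e-9 for i in range(160))
print("pattern repeats every 40 samples:", repeats)
```

Nothing in the list `combined` is labelled "440" or "660". The surface only ever holds a single displacement at a time, and the two frequencies are carried in how that displacement changes from moment to moment.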

The eardrum is coupled to the inner ear, where tuned receptors called “hair cells” within the cochlea respond to the multiple frequencies within the sound and send signals through the auditory nerve to the brain, where “hearing” occurs.

It’s the “complex waveform made up of many frequencies superimposed on each other” bit I don’t understand.

How can a single physical device - like an eardrum or loudspeaker - vibrate at several frequencies, all at the same time?

If the frequencies occur at the identical same moment why don’t they just add up to another frequency?

If we could see the wave form there would be a single moving crest not lots of different crests all moving at the same time?

There are billions of molecules in air so I can understand multiple streams of them arriving at the eardrum or microphone diaphragm, each stream resonating separately at a different frequency.

But why don’t they bump into each other and knock each other off frequency?

I assume that the vocal cords can only vibrate at a single frequency at any given moment but the note they produce is then corrupted by the various cavities and physical structures in the body, which produces a mix of frequencies.

These frequencies will be supportive because they all came from/are variations on the original pure note or single frequency but when other voices and instruments are added we must surely end up with lots of vibrating molecule streams all banging into each other?

And, and this is the crux of my non-understanding, how then does a single physical surface capture or reproduce them (the ones that survive)?

You’re asking difficult questions, but I’ll do my best to explain.

When we talk about a “frequency”, we are referring to a simple oscillating “cycle” known as a “sine tone”. The “Tone Generator” in the Audacity Generate menu creates “sine tones” by default. If you generate a sine tone and zoom in close on it you will see something like this:

In the “Analyze” menu there is a tool called “Plot Spectrum”.

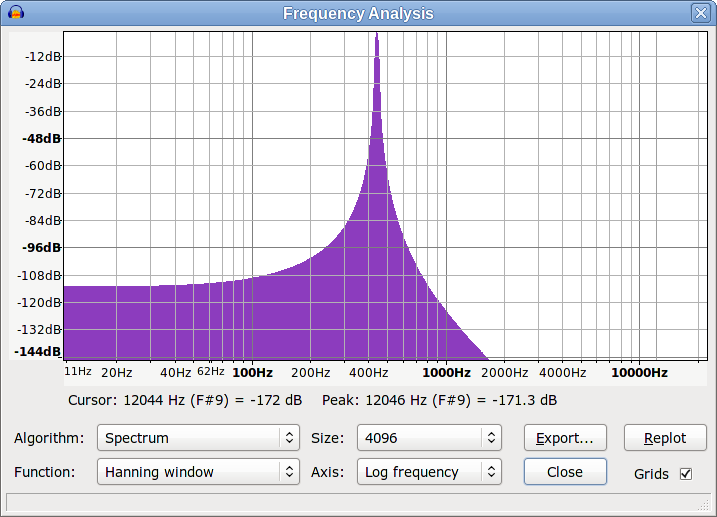

With the “Axis” set to “Log Frequency” you should see something like this:

The “spike” indicates the single tone frequency. Theoretically it should just be a single vertical line, but due to limitations of the frequency analysis the spike appears to spread out at the bottom - that is just a limitation of the tool - with a bit of tweaking of the Spectrum settings you can get a more accurate plot. This is the same audio, but it shows the single frequency a bit more accurately:

Now try generating a “Square No Alias” tone.

Select the Tone Generator and in the “Waveform” selection choose “Square No Alias”.

Play the tone and you will hear that it sounds much “harsher” than the Sine tone, though the “note” (the fundamental frequency) is the same (by default it is 440 Hz “A”.)

Zoom in close and you will see that the shape of the waveform is quite different - it looks “square”:

Why does it sound so different, but still the same “note”?

If we look at “Plot Spectrum” we can see that the “fundamental” frequency (the biggest, lowest spike) is the same frequency as for the sine tone, but there are many other frequencies present also. These other frequencies are “harmonics” and give the sound its different timbre.
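The same idea can be run in reverse: start with sine tones and add them up. The sketch below uses the standard Fourier series for a square wave (the fundamental plus the odd harmonics 3, 5, 7, ... each at 1/k of the strength). It is meant as an illustration of the principle, not as a description of how Audacity's tone generator is written, and the function name is made up.

```python
import math

def square_from_harmonics(phase, highest_harmonic):
    """Sum the odd harmonics 1, 3, 5, ... of a sine, each at 1/k strength.

    phase is the position within one cycle of the fundamental, in radians.
    """
    total = 0.0
    for k in range(1, highest_harmonic + 1, 2):
        total += math.sin(k * phase) / k
    return 4 / math.pi * total

# Look at the middle of the positive half of the cycle, where an ideal
# square wave sits at exactly +1.
quarter = math.pi / 2
for top in (1, 3, 9, 99):
    print("harmonics up to", top, "->", round(square_from_harmonics(quarter, top), 3))
```

With only the fundamental the value overshoots, and as more odd harmonics are added it settles towards the flat top of the square wave. For a 440 Hz fundamental those harmonics sit at 1320 Hz, 2200 Hz, 3080 Hz and so on, which is where the extra spikes appear in the spectrum plot.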

Does that help at all?

That’s a lot of work; really appreciate it.

But doesn’t it just confirm that the sounds we typically hear in music are made up of lots of frequencies combining somehow? Which is my starting point.

If in a single heard or listened to instant there are multiple frequencies generated each carried on their own resonating or vibrating stream of molecules how does a single solid surface like a loudspeaker cone reproduce them?

I understand about the hairs in the ear touching, or almost, the eardrum, each one tuned to a different set of frequencies but the ear drum has to (re)produce those different frequencies to make the individual hairs react. How does it do that? All at the same time?

How does one solid microphone diaphragm send the electrical equivalent of multiple streams of vibrating air molecules down a single wire? At the same time?

The loudspeaker / eardrum are not responding to multiple separate streams, but to the combined effect of those frequencies, so they are responding to the waveform (as shown graphically in the track) rather than to the individual frequencies (as shown in the spectrum).

In the inner ear, the hair cells respond to the individual frequencies (as shown in the spectrum) with different hair cells “tuned” to respond to different frequencies.
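As a very rough analogy for a "tuned" detector, here is a sketch that takes one combined waveform and asks how strongly a given frequency is present in it, by comparing the waveform against a sine and cosine at that frequency. This is the arithmetic behind a single point of a spectrum plot, not a model of a real hair cell, and the tones, sample rate and function name are assumptions for the example.

```python
import math

RATE = 8000   # samples per second (arbitrary for this sketch)
COUNT = 800   # 0.1 seconds of sound

# One waveform, as the eardrum would receive it: two tones added together.
signal = [0.5 * math.sin(2 * math.pi * 440 * i / RATE)
          + 0.3 * math.sin(2 * math.pi * 660 * i / RATE)
          for i in range(COUNT)]

def strength(samples, freq):
    """Estimate the amplitude of the component at freq in the samples."""
    re = sum(s * math.cos(2 * math.pi * freq * i / RATE) for i, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * freq * i / RATE) for i, s in enumerate(samples))
    return 2 * math.hypot(re, im) / len(samples)

for freq in (440, 550, 660):
    print(freq, "Hz:", round(strength(signal, freq), 3))
```

The detectors "tuned" to 440 Hz and 660 Hz respond, while the one tuned to 550 Hz finds next to nothing, even though all three were given exactly the same single waveform.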