Note that I described two ways to avoid aliasing.

“Oversampling” (using a much higher sample rate and then converting back down to a ‘normal’ sample rate) is one way. This works because the very high sample rate can represent much higher frequencies, so no aliasing occurs there. Downsampling (with an anti-aliasing filter) then removes the excessively high frequencies before the rate is converted back down. This approach is the only option if you can’t limit the frequency range before converting from analog to digital.
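A minimal Python sketch of the oversampling idea, using the same tones as the modulation experiment in this post (a 20000 Hz sine multiplied by a 30000 Hz sine, track rate 88200 Hz). The x8 factor, the 255-tap Hann-windowed sinc filter, the 40000 Hz cutoff and the helper names (`lowpass_taps`, `filter_and_decimate`, `tone_level`, `two_tone_product`) are my own assumptions for illustration. Real converters and synths use more carefully designed (and much faster) filters.

```python
import math

FS = 88200                 # track sample rate used in the experiment
OVERSAMPLE = 8             # assumed internal oversampling factor
FS_HI = FS * OVERSAMPLE    # 705600 Hz internal rate


def lowpass_taps(cutoff_hz, fs, num_taps=255):
    """Hann-windowed sinc FIR low-pass, normalised to unity gain at DC."""
    mid = (num_taps - 1) / 2
    fc = cutoff_hz / fs
    taps = []
    for i in range(num_taps):
        x = i - mid
        ideal = 2 * fc if x == 0 else math.sin(2 * math.pi * fc * x) / (math.pi * x)
        window = 0.5 - 0.5 * math.cos(2 * math.pi * i / (num_taps - 1))
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]


def filter_and_decimate(signal, factor, taps):
    """Apply the FIR filter, computing only every `factor`-th output sample."""
    out = []
    for n in range(len(taps) - 1, len(signal), factor):
        out.append(sum(t * signal[n - k] for k, t in enumerate(taps)))
    return out


def tone_level(signal, freq, fs):
    """Amplitude of the component at `freq` (single-bin DFT)."""
    w = 2 * math.pi * freq / fs
    re = sum(s * math.cos(w * n) for n, s in enumerate(signal))
    im = sum(s * math.sin(w * n) for n, s in enumerate(signal))
    return 2 * math.hypot(re, im) / len(signal)


def two_tone_product(fs, n_samples):
    """A 20000 Hz sine multiplied by a 30000 Hz sine, sampled at `fs`."""
    return [math.sin(2 * math.pi * 20000 * n / fs) *
            math.sin(2 * math.pi * 30000 * n / fs) for n in range(n_samples)]


N_OUT = 4410   # 50 ms at 88200 Hz: 10000 and 38200 Hz both fit whole cycles

# Naive: multiply directly at 88200 Hz, so the 50000 Hz product folds to 38200 Hz
naive = two_tone_product(FS, N_OUT)

# Oversampled: multiply at 705600 Hz (50000 Hz is representable there),
# low-pass at 40000 Hz, then keep every 8th sample to get back to 88200 Hz
taps = lowpass_taps(40000, FS_HI)
hi_rate = two_tone_product(FS_HI, len(taps) - 1 + OVERSAMPLE * N_OUT)
oversampled = filter_and_decimate(hi_rate, OVERSAMPLE, taps)

naive_alias = tone_level(naive, 38200, FS)
oversampled_alias = tone_level(oversampled, 38200, FS)
oversampled_wanted = tone_level(oversampled, 10000, FS)
print(f"38200 Hz level: naive {naive_alias:.3f}, oversampled {oversampled_alias:.4f}")
print(f"10000 Hz level after oversampling: {oversampled_wanted:.3f}")
```

The naive version shows the aliased 38200 Hz component at full strength (0.5). In the oversampled version, the 50000 Hz product is removed by the filter before the rate drops, so only a tiny residue lands at 38200 Hz, while the wanted 10000 Hz component passes through.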

The other approach is to limit the frequency bandwidth of the generated signal. That’s how a chirp using the “Square, no alias” waveform avoids aliasing: the frequency range of the generated sound is kept below half the sample rate.
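I don’t know exactly how Audacity implements “Square, no alias”, but a common way to band-limit a square wave is to build it from its Fourier series (odd harmonics k at amplitude 4/(πk)) and simply stop before any harmonic reaches half the sample rate. A sketch for a fixed frequency; `bandlimited_square` is a hypothetical name, and a chirp would additionally have to recompute the set of allowed harmonics as its frequency sweeps upwards.

```python
import math


def bandlimited_square(freq, fs, n_samples):
    """Square wave from its Fourier series, keeping only harmonics below fs/2.

    A square wave contains odd harmonics k = 1, 3, 5, ... at amplitude 4/(pi*k);
    any harmonic at or above fs/2 is left out, so nothing can alias.
    """
    nyquist = fs / 2
    harmonics = [k for k in range(1, int(nyquist / freq) + 1, 2) if k * freq < nyquist]
    wave = []
    for n in range(n_samples):
        phase = 2 * math.pi * freq * n / fs
        wave.append(4 / math.pi * sum(math.sin(k * phase) / k for k in harmonics))
    return wave, harmonics


wave, harmonics = bandlimited_square(5000, 44100, 100)
print(harmonics)   # [1, 3]: 5000 and 15000 Hz; 25000 Hz would exceed 22050 Hz
```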

From the Wikipedia article “Aliasing”:

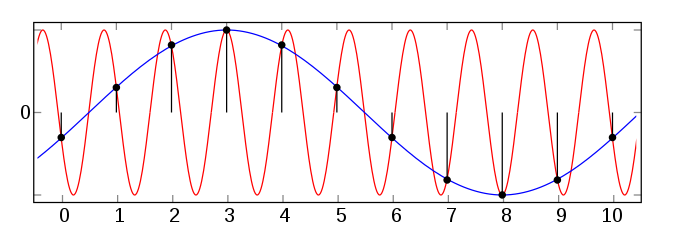

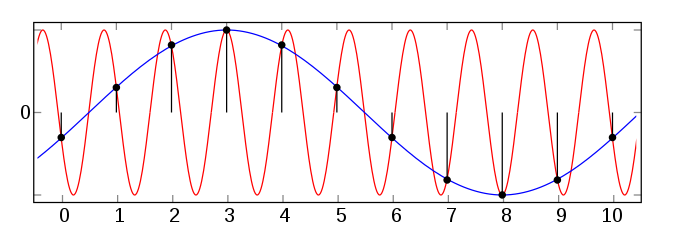

This image helps to explain how aliasing occurs:

We can see that the above set of sample points can be fitted by two different sine waves. However, the red wave has a frequency that is higher than half the sample rate. The Nyquist-Shannon sampling theorem tells us that for a “band-limited” signal (one containing no frequencies at or above half the sample rate), there is, and can be, only one (analog) signal that fits the dots. If we fed the red analog waveform directly into an A/D (analog to digital) converter running at the sample rate shown, then on playback (converting from digital to analog) we would hear the blue waveform. To prevent that from happening, both A/D and D/A converters limit the bandwidth on the analog side to less than half the sample rate on the digital side. Thus, in all cases, only one possible waveform fits the sample values.
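A quick numerical check of the “two waves, same dots” idea. The numbers are illustrative assumptions, not read from the Wikipedia figure: a sample rate of 10, a “red” tone at 9 (above half the sample rate) and a “blue” tone at 10 − 9 = 1. At every sample instant the two waves give the same value.

```python
import math

FS = 10.0              # sample rate, arbitrary illustrative value
F_HIGH = 9.0           # "red" tone: above FS / 2 = 5
F_LOW = FS - F_HIGH    # "blue" tone: 1.0, below FS / 2

red = [math.sin(2 * math.pi * F_HIGH * n / FS) for n in range(20)]
# sin(2*pi*(FS - f)*n/FS) == -sin(2*pi*f*n/FS) for integer n, so the blue
# wave is the low-frequency sine with its phase inverted
blue = [-math.sin(2 * math.pi * F_LOW * n / FS) for n in range(20)]

mismatch = max(abs(r - b) for r, b in zip(red, blue))
print(f"largest difference between sample values: {mismatch:.1e}")
```

The difference is only floating-point rounding error, which is exactly why the samples alone cannot tell the two waves apart.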

Here’s an experiment that shows what “bad things” can happen when synthesizing in the digital domain:

- Set the Project Rate (bottom left corner of the Audacity window) to 88200.

- Generate four tracks containing sine waves at 2000, 3000, 20000 and 30000 Hz (in that track order).

- Combine the first two tracks (2000 and 3000 Hz) into one stereo track (see “Splitting and Joining Stereo Tracks” in the Audacity Manual).

- Combine the last two tracks (20000 and 30000 Hz) into a second stereo track.

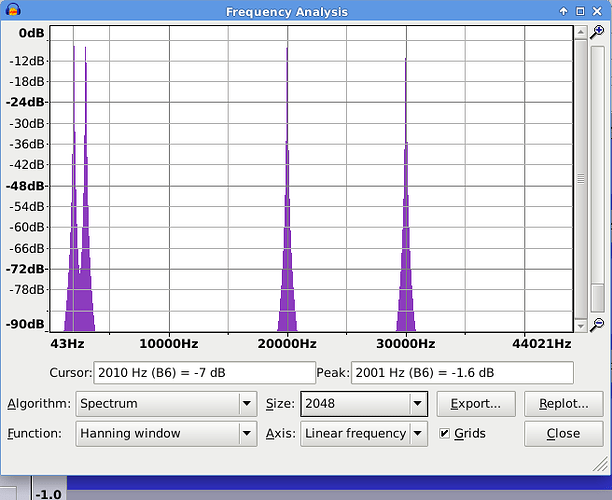

- Select all and look at the spectrum:

As expected, we have peaks at 2000, 3000, 20000 and 30000.

What happens if we modulate the left channel with the right channel? Amplitude modulation is equivalent to multiplication, and multiplying two sine tones gives us two new tones: one at the difference and the other at the sum of the two original frequencies. Thus, in the first track we would expect:

2000 + 3000 = 5000 Hz

3000 - 2000 = 1000 Hz

and for the second track:

20000 + 30000 = 50000 Hz

30000 - 20000 = 10000 Hz
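The trig identity behind these numbers is the product-to-sum formula. Writing each tone as a sine of frequency $f_1$ or $f_2$:

$$
\sin(2\pi f_1 t)\,\sin(2\pi f_2 t) = \tfrac{1}{2}\cos\big(2\pi (f_2 - f_1)\, t\big) - \tfrac{1}{2}\cos\big(2\pi (f_1 + f_2)\, t\big)
$$

so with $f_1 = 20000$ Hz and $f_2 = 30000$ Hz the product contains a 10000 Hz and a 50000 Hz component, each at half the original amplitude.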

Let’s try it. Audacity does not have a built-in amplitude modulation effect, but it has a built-in scripting language called “Nyquist” that allows us to do almost anything (see “Missing features” on Audacity Support).

- Select both tracks, then apply this code using the “Nyquist Prompt” effect (see “Nyquist Prompt” in the Audacity Manual):

```lisp
(mult (aref *track* 0) (aref *track* 1))
```

I’m assuming that you are using an up-to-date version of Audacity and that the “Use legacy (version 3) syntax” option is NOT selected.

The selected tracks are passed to the Nyquist program one at a time, and the code is applied to each.

In this code, `*track*` is the audio data from the current track.

`(aref *track* 0)` gives the left channel and `(aref *track* 1)` gives the right channel.

`mult` is the multiplication command.

The result is passed back from Nyquist to Audacity and returned to the track. Because we are returning a single (mono) sound to a stereo track, Audacity copies the returned audio into both channels.
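For anyone who wants to see the arithmetic outside Audacity, this Python sketch does roughly what that Nyquist line does for the first track: `mult` of two sounds multiplies them sample by sample. It is only an illustration; the variable names and the 5 ms length are my own choices, and Nyquist itself works on whole sounds rather than Python lists.

```python
import math

FS = 88200
N = 441   # 5 ms of audio

# Hypothetical stereo track: left channel 2000 Hz, right channel 3000 Hz
left = [math.sin(2 * math.pi * 2000 * n / FS) for n in range(N)]
right = [math.sin(2 * math.pi * 3000 * n / FS) for n in range(N)]

# Rough equivalent of (mult (aref *track* 0) (aref *track* 1)):
# multiply the two channels sample by sample, giving one (mono) sound
mono = [lv * rv for lv, rv in zip(left, right)]

# A mono result returned to a stereo track ends up in both channels
out_left, out_right = list(mono), list(mono)

# Check against the product-to-sum identity: 0.5*cos(1000 Hz) - 0.5*cos(5000 Hz)
expected = [0.5 * math.cos(2 * math.pi * 1000 * n / FS)
            - 0.5 * math.cos(2 * math.pi * 5000 * n / FS) for n in range(N)]
error = max(abs(m - e) for m, e in zip(mono, expected))
print(f"max deviation from 1000 Hz + 5000 Hz components: {error:.1e}")
```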

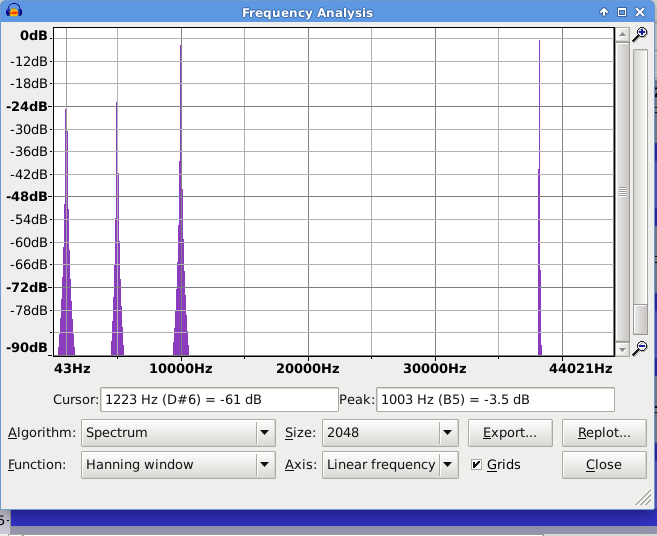

- Look at the result in Plot Spectrum:

As expected, the first track has peaks at 1000 Hz and 5000 Hz, but in the second track we have peaks at 10000 Hz and 38200 Hz.

What went wrong (38200 Hz instead of 50000 Hz) is that our track sample rate is ‘only’ 88200 Hz, which means that the highest frequency that can be represented is 44100 Hz, but we have calculated sample values that “should” have a frequency of 50000 Hz. As shown previously, this new data is ambiguous: not only would 50000 Hz fit the generated sample values, but so does 38200 Hz (88200 - 50000). 50000 Hz is above the limit, so we get a peak at 38200 Hz instead, and that is aliasing distortion.
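Where an out-of-range frequency ends up can be worked out by “folding” it around half the sample rate. A small sketch; `alias_frequency` is a hypothetical helper name, and the fold rule is the standard one for real-valued sampled sinusoids: 50000 Hz at 88200 Hz lands at 88200 - 50000 = 38200 Hz.

```python
import math


def alias_frequency(f, fs):
    """Frequency (0..fs/2) at which a sampled sinusoid of frequency f appears."""
    f = f % fs                          # f and f + k*fs give identical samples
    return fs - f if f > fs / 2 else f  # fold anything above fs/2 back down


FS = 88200
print(alias_frequency(10000, FS))   # 10000: below 44100 Hz, unchanged
print(alias_frequency(50000, FS))   # 38200: 88200 - 50000

# The sample values of a 50000 Hz cosine and a 38200 Hz cosine are identical
diff = max(abs(math.cos(2 * math.pi * 50000 * n / FS) -
               math.cos(2 * math.pi * 38200 * n / FS)) for n in range(1000))
print(f"largest sample difference: {diff:.1e}")
```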

Moving on to the relevance of this to your question:

If you use a digital synthesizer that creates aliasing distortion in this way, then that is a fault / limitation of the synthesizer and there is nothing that you can do about it.

What the synthesizer code should do to ‘avoid’ this problem is use a sufficiently high sample rate internally (oversampling). It is not uncommon for high-quality digital synths to oversample x8, that is, at 8 times the sample rate of the track, so for a 44100 Hz track the synth could be working internally at sample rates as high as 352800 Hz.
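Whether a given factor is enough depends on the highest frequency the synth generates internally. A tiny sketch of that arithmetic; the power-of-two restriction and the name `min_oversampling` are my own assumptions, not taken from any particular synth. After generating at the internal rate, the synth still has to low-pass filter and decimate back down to the track rate.

```python
def min_oversampling(f_max, track_rate, max_factor=64):
    """Smallest power-of-two factor whose internal Nyquist limit exceeds f_max."""
    factor = 1
    while f_max >= factor * track_rate / 2:
        factor *= 2
        if factor > max_factor:
            raise ValueError("f_max is too high for max_factor")
    return factor


print(44100 * 8)                          # 352800 Hz internal rate for x8
print(min_oversampling(50000, 88200))     # 2: 176400 Hz internally, limit 88200 Hz
print(min_oversampling(100000, 44100))    # 8: content up to 100 kHz on a 44100 Hz track
```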

I don’t know whether amsynth uses oversampling. As it is intended for real-time use, it may not, in which case you can expect aliasing when generating high notes, though it is much less of a problem with bass notes.
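To put rough numbers on “high notes vs bass notes”, assume a naive (not band-limited) sawtooth oscillator, whose k-th harmonic has amplitude 1/k, running at 44100 Hz. I don’t know which oscillator algorithms amsynth actually uses, so this is only an illustration; `first_alias_level_db` is a hypothetical helper. For A1 (55 Hz) the first harmonic above 22050 Hz is the 401st, about 52 dB down; for A7 (3520 Hz) it is the 7th, only about 17 dB down.

```python
import math


def first_alias_level_db(note_hz, fs=44100):
    """For a naive sawtooth (harmonic k at amplitude 1/k), return the level in dB
    relative to the fundamental of the first harmonic above fs/2, and its number."""
    k = math.floor(fs / 2 / note_hz) + 1
    return 20 * math.log10(1 / k), k


for name, hz in (("A1 (bass)", 55.0), ("A7 (high)", 3520.0)):
    level, k = first_alias_level_db(hz)
    print(f"{name}: harmonic {k} is the first above 22050 Hz, at {level:.1f} dB")
```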

Audacity can do either; it depends on how your audio system is set up.

If you are using ALSA as the host and “pulse” as the input / output device, then the audio is routed through PulseAudio at whatever sample rate PulseAudio is using (usually 44100 or 48000 Hz).

If you are using ALSA as the host and one of the “hw” options as the input/output device, then Audacity connects directly to the sound card via ALSA, and the sample rate will be whatever your sound card chooses.

If you are using JACK Audio Connection Kit as the host, then you can choose whether to connect to the physical inputs/outputs or directly to the in/out ports of any application that exposes JACK ports (any audio software that supports JACK). The sample rate will be whatever rate JACK is running at, which must be a rate that your hardware supports.