There’s a fuzzy, unofficial rule that if you can pass ACX, you can submit anywhere else successfully. ACX has very strict guidelines that compare favorably to broadcast standards.

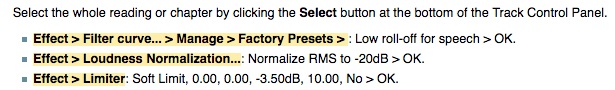

This is the short, graphic version.

Please note this is valid only in Audacity 2.3.3. If you’re using 2.4.1, the rules change a bit. Which Audacity version are you using?

After Audiobook Mastering, I get everything passing easily, with a background noise floor of -75dB. The ACX standard is -60dB, and the real-life recommendation is -65dB or quieter.
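To see what that noise number means, here is a minimal sketch of an RMS level check on a stretch of room tone (the "silent" gaps between phrases). It assumes you already have the samples as floats where full scale is 1.0. The function names are hypothetical, and this plain RMS is not Audacity’s ACX Check code, so its readings may not match that tool exactly.

```python
import math

def rms_dbfs(samples):
    """RMS level of float samples (full scale = 1.0), in dBFS."""
    if not samples:
        raise ValueError("need at least one sample")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return float("-inf")  # pure digital silence
    # 10*log10(mean square) is the same as 20*log10(RMS amplitude)
    return 10.0 * math.log10(mean_square)

def noise_floor_ok(room_tone, limit_db=-60.0):
    """True if the room tone is at or below the limit (more negative = quieter)."""
    return rms_dbfs(room_tone) <= limit_db

if __name__ == "__main__":
    # hypothetical: one second of very quiet hiss at 44.1 kHz
    room_tone = [0.0001, -0.0001] * 22050
    print(round(rms_dbfs(room_tone), 1))  # prints -80.0
    print(noise_floor_ok(room_tone, limit_db=-65.0))  # prints True
```

Passing `limit_db=-65.0` checks against the real-life recommendation instead of the bare -60dB standard.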

Check with the client, but voice work is often submitted in mono, with one blue wave instead of the two you get in stereo. It cuts file size, and with it transmission and storage time, in half at no loss of quality. Also, if you read too loud and your waves go all the way to the top at any point (clipping), you could have permanent sound damage.

Tracks > Mix > Mix Stereo down to Mono.
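The "half the size" claim is simple arithmetic, sketched below assuming uncompressed 44.1 kHz, 16-bit WAV (file headers ignored). The downmix shown is a plain average of the two channels, a common approach but an assumption here, not a description of exactly what Audacity’s Mix Stereo down to Mono does internally. The function names are hypothetical.

```python
def mix_to_mono(left, right):
    """Average two channels sample by sample (assumed downmix method)."""
    if len(left) != len(right):
        raise ValueError("channels must have the same length")
    return [(l + r) / 2.0 for l, r in zip(left, right)]

def pcm_bytes(seconds, sample_rate=44100, bit_depth=16, channels=1):
    """Uncompressed PCM size in bytes, ignoring file headers."""
    return seconds * sample_rate * (bit_depth // 8) * channels

if __name__ == "__main__":
    print(pcm_bytes(3600, channels=2))  # one hour stereo: prints 635040000
    print(pcm_bytes(3600))              # one hour mono:   prints 317520000
```

When both channels carry the same voice, averaging them gives back the same wave, which is why going mono costs nothing for a single narrator.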

Export an Edit Master as WAV or Save as Audacity Lossless Project. That’s your Archive.

ACX requires submission as a lower-quality, compressed MP3. Once you make an MP3, you can’t edit it without further sound damage, so any further work (edits, changes) should be done on a copy of your archive, and then you make a new MP3 from that. That’s not obvious.

ACX will let you submit a short voice test for evaluation. Submitting a whole book as a first effort is a classic New User mistake; it’s not that unusual to burn permanent damage into the work and have to read it all again. (I don’t think this will be a problem for you, but I’m not an inspector.)

ACX Audition

Digression.

> I was aiming to get my Foreground Contrast at around -22.0 dB. Setting levels has been a 3-day battle.

You don’t need the battle. Nobody reads straight into ACX compliance. The actual goal is to read so your wave peaks occasionally reach half-way, or -6dB (half-way on the waveform’s linear scale is about -6dB on the meters). Audiobook Mastering tools will take it from there. If your reading is too far off, especially too quiet, mastering has to boost everything, background noise included, and you may not pass noise. That’s the evil lurking behind bad readings.
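Both points can be checked with a little arithmetic. The sketch below converts a linear peak to dB (showing half-way is about -6dB) and uses a simplified model, assuming mastering applies one uniform gain, to show why a quiet reading can fail noise. The +12 dB boost and the function names are hypothetical numbers and names chosen for illustration.

```python
import math

def amplitude_to_db(amplitude):
    """Convert a linear peak amplitude (1.0 = full scale) to dBFS."""
    if amplitude <= 0.0:
        return float("-inf")
    return 20.0 * math.log10(amplitude)

def noise_after_gain(noise_floor_db, gain_db):
    """Uniform gain lifts the background noise by the same dB as the voice."""
    return noise_floor_db + gain_db

if __name__ == "__main__":
    print(round(amplitude_to_db(0.5), 2))  # half-way: prints -6.02
    # hypothetical quiet reading that needs a +12 dB boost in mastering
    lifted = noise_after_gain(-65.0, 12.0)
    print(lifted)           # prints -53.0
    print(lifted <= -60.0)  # prints False: fails the -60dB noise standard
```

The same room that measured -65dB before the boost now sits at -53dB, which is how a too-quiet read turns into a noise rejection.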

Your client may need other specifications. Your mileage may vary; consult your local listings.

Koz