Hi,

I’m attempting my first audiobook, having used these forums and played with Audacity for a few months.

I have a reasonably good setup, mic-wise (though it can still be troubled a little by traffic noise in the middle of the day).

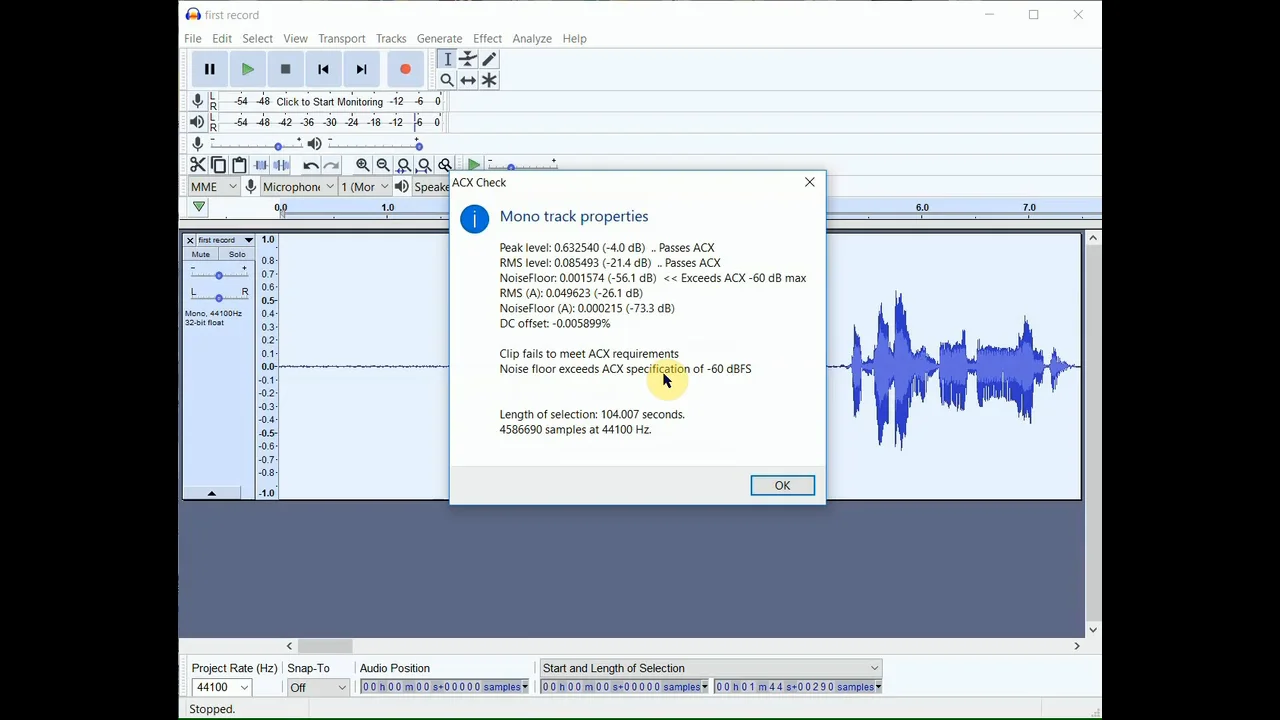

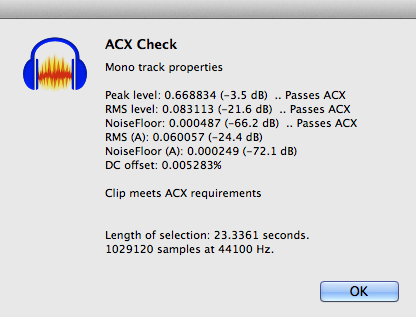

My recordings mostly pass the ACX test, but sometimes, after compression and normalisation, I find the background noise annoyingly (and, worse still, unevenly) over-amplified.
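To be clear about what I mean by "passing", here is a rough Python sketch of the three measurements as I understand them from the published ACX requirements: peak no higher than -3 dB, RMS between -23 and -18 dB, and noise floor no higher than -60 dB. The function names are my own, and this is not how Audacity’s ACX Check plugin is actually implemented; it only illustrates the arithmetic on float samples in the range -1.0 to 1.0.

```python
import math


def rms_db(samples):
    """RMS level in dB relative to full scale for float samples in -1.0..1.0."""
    if not samples:
        return float("-inf")
    mean_square = sum(s * s for s in samples) / len(samples)
    return 10 * math.log10(mean_square) if mean_square > 0 else float("-inf")


def peak_db(samples):
    """Highest absolute sample value, in dB relative to full scale."""
    peak = max((abs(s) for s in samples), default=0.0)
    return 20 * math.log10(peak) if peak > 0 else float("-inf")


def acx_report(voice, room_tone):
    """Check a clip against the three limits (hypothetical helper).

    voice:     samples of the whole chapter
    room_tone: samples from a stretch with no speech (assumed to be
               picked by hand; real tools find the quietest stretch)
    """
    return {
        "peak_ok": peak_db(voice) <= -3.0,
        "rms_ok": -23.0 <= rms_db(voice) <= -18.0,
        "noise_ok": rms_db(room_tone) <= -60.0,
    }


# A test tone at roughly -20 dB RMS and very quiet room tone.
voice = [0.14 * math.sin(2 * math.pi * i / 100) for i in range(1000)]
room_tone = [0.0005 * math.sin(2 * math.pi * i / 100) for i in range(1000)]
print(acx_report(voice, room_tone))
```

My problem is the third number: anything that raises the voice to meet the RMS target can drag the room tone up with it.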

Now, I know there are good standard tools for helping out with all of these things, but I’m not sure which is the best order to run them.

I have hunted around for answers to several of my concerns on these forums. Quite often, the answers to one query conflict with the answers to another. So I hope you don’t mind me putting all my queries into one post.

Please let me know if I should ask them separately.

-

Am I right in thinking that it’s best to run the standard filters mentioned here on my raw clips BEFORE my main edit (i.e. deleting, cutting, splicing, etc.)?

-

Using Chris’s Dynamic Compressor

I heard much mention of this, tried it, and like it.

(Before that, I tried Levelator, which was good but occasionally sounded distorted.)

It seems to preserve warmth well by adjusting the bass, midrange and treble separately. Am I understanding that right?

Specific Compression questions:

a) RMS Normalize. Compress Dynamics seems to sort out RMS anyway, as part of compression — so do I even need to bother with running a Normalize filter separately? Or does that dedicated RMS Normalize filter do a subtler job?

If so — which is the latest version that you’d recommend?

b) I do find that the tail end of my paragraphs can fade out after Compress Dynamics (as though I were turning down the mic volume). Am I doing something wrong? Is that something that running RMS Normalize first would prevent?

Might the “Hardness” setting stop this? (Could someone explain to me how “Hardness” works, i.e. what it actually does to the sound?)

c) I am running the 1.2.6 version.

— Has anyone tried Compress Dynamics 1.2.7 (beta in 2012)? Does that work better?

Do the “compress bright sounds” or “boost bass” settings make a useful difference (or make it worse)?

d) Has anyone actually updated Chris’s Dynamic Compressor since his death?

Is there maybe a better tool now to recommend for all and sundry?

e) Noise Gate. I find that Compress Dynamics can boost background noise patchily — but that, when I use noise gate to prevent this, room-tone gets replaced with eerie muffled silence. Obviously, what I want is a natural room tone, which simply doesn’t get amplified when my voice does. How to keep the room tone without amplifying it? Would a zero setting on “Noise gate falloff” help — or does it actually need to be a positive number to prevent the room-tone being amplified?
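To make the room-tone question concrete, here is how I picture the kind of gate I am after: one that turns quiet passages down by a few dB instead of muting them. This is only a simplified Python sketch with made-up names and settings (`gentle_gate`, a 441-sample block, a -45 dB threshold, a -6 dB reduction). It is not how Compress Dynamics or Audacity’s Noise Gate work internally, and a real gate would add attack and decay smoothing so the gain doesn’t jump between blocks.

```python
import math


def gentle_gate(samples, block=441, threshold_db=-45.0, reduction_db=-6.0):
    """Attenuate (rather than silence) blocks whose RMS is below the threshold.

    All parameter values are illustrative assumptions, not recommended settings.
    """
    reduction = 10 ** (reduction_db / 20)  # dB to linear gain
    out = []
    for start in range(0, len(samples), block):
        chunk = samples[start:start + block]
        mean_square = sum(s * s for s in chunk) / len(chunk)
        level_db = 10 * math.log10(mean_square) if mean_square > 0 else float("-inf")
        # Quiet block: keep the room tone, just lower it. Loud block: untouched.
        gain = reduction if level_db < threshold_db else 1.0
        out.extend(s * gain for s in chunk)
    return out


# Room tone at about -60 dB followed by speech-level audio at about -6 dB.
clip = [0.001] * 441 + [0.5] * 441
gated = gentle_gate(clip)
print(gated[0], gated[-1])
```

With a reduction of minus infinity this becomes the hard gate that gives me the eerie silence; with 0 dB it does nothing. What I can’t work out is where between those two the natural-sounding setting lies, and whether it should come before or after the compression.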

-

So, Noise Gate filter. Since Compress Dynamics applies a noise gate, need I even bother with this dedicated filter? Does it perhaps give more subtle or controllable results?

If yes, should I run it before or after normalisation and compression?

-

Noise Reduction.

Should I do this before or after Normalizing/Compressing?

Obviously, I want to keep this to a minimum so that my voice doesn’t get too artifacty (and my natural breathing doesn’t start to sound like Darth Vader).

-

De-clicker

I don’t suffer too much from popping, mouth clicks and lipsmacks but, having been warned to watch out for these, I fear I may be becoming obsessive. My mic does, occasionally, introduce the odd tiny electronic “nit.” Should I worry about that? The de-clicker filter does an excellent job of eliminating almost all of these, but it can also muffle the ‘b’s, ‘p’s, ‘d’s and, especially, ‘t’s that you want to hear at the beginnings and ends of words. So, is it even necessary? If so, is there any sense in going through the file and selectively de-clicking only the parts that have clicks, pops or smacks and no consonants? Or, if these were never too bad, is that (on an hour-long chapter) just unnecessary hard work?

Is the slight loss of articulate consonants worth it just for ease, peace of mind, and a slightly more click-free audiobook? Or, if the clicks aren’t too intrusive, is it better just to live with them and keep the clarity of speech?

-

Lastly, LF Rolloff.

Should I just run this on all raw files right at the start?

I don’t much like it, as it takes some warmth out of the voice, but do tell me if everyone on ACX applies it as standard, so that my files would sound weirdly amateur without it.

I’m grateful for anyone and everyone’s input on this.

If you have a standard chain that you follow, I would love to know the best order to apply the filters - and/or which ones I can afford to leave out.

Thanks for all your help.

Eric